Generating Structured Outputs from Language Models: Benchmark and Studies

Nathan Ranchin

Abstract: Reliably generating structured outputs has become a critical capability for modern LLM applications. Constrained decoding has emerged as the dominant technology across industry, open-source, and research to enforce structured outputs during generation. Despite its growing adoption, a systematic evaluation of constrained decoding remains lacking. Constrained decoding frameworks have standardized around JSON Schema as a structured data format, with most guaranteeing constraint compliance given a schema. However, their effectiveness in practice remains unclear. In this work, we present an evaluation framework to assess constrained decoding approaches across three critical dimensions: efficiency in generating constraint-compliant outputs, coverage of diverse constraint types, and quality of the generated outputs. To facilitate this evaluation, we introduce JSONSchemaBench, a benchmark comprising 10K real-world JSON schemas that encompass a wide range of constraints with varying complexity. We pair this new benchmark with the existing official JSON Schema Test Suite and carefully evaluate six state-of-the-art constrained decoding frameworks, including Guidance, Outlines, Llamacpp, XGrammar, OpenAI, and Gemini. Through extensive experiments, we reveal their capabilities and limitations on structured generation with real-world JSON schemas. Our work provides actionable insights for improving constrained decoding frameworks and structured generation tasks, setting a new standard for evaluating constrained decoding and structured generation. We release JSONSchemaBench at https://github.com/guidance-ai/jsonschemabench.

Introduction

Large Language Models (LLMs) are increasingly deployed as agents that interact with external tools to enhance their capabilities. While these models excel at generating human-like text, ensuring they produce outputs in strictly defined formats remains challenging. JSON Schema has emerged as the industry standard for defining structured outputs, but until now, there hasn't been a comprehensive way to evaluate systems that generate schema-compliant content.

This research introduces JSONSchemaBench, a rigorous evaluation framework that assesses constrained decoding approaches across three crucial dimensions: efficiency, coverage, and output quality. The benchmark includes 10,000 real-world JSON schemas, providing a diverse testing ground for evaluating constrained decoding frameworks.

Understanding Constrained Decoding

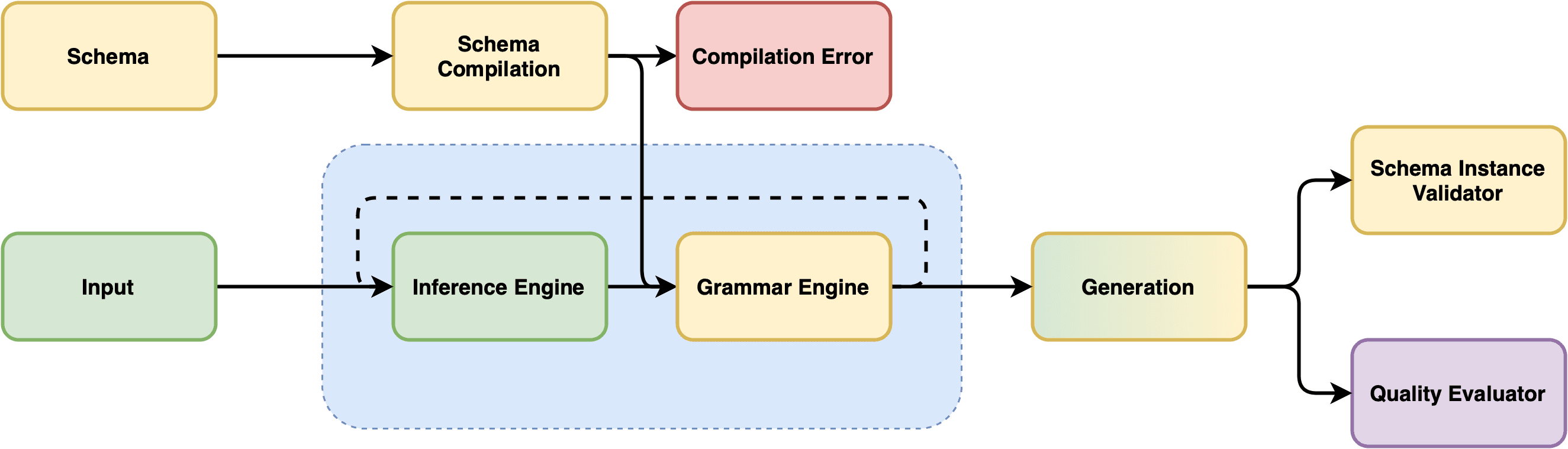

Constrained decoding is a technique that ensures Large Language Models generate outputs conforming to specific structural requirements. It achieves this by actively intervening in the model's generation process, implementing constraints that guide the output without requiring additional training or fine-tuning.

Core Algorithm

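The basic constrained decoding process can be formalized as:

$$y^* = \arg\max_{y \in \mathcal{Y}} P(y \mid x) \quad \text{subject to} \quad C(y) = 1$$

where:

- $y^*$ is the output sequence

- $\mathcal{Y}$ is the set of all possible sequences

- $P(y \mid x)$ is the probability of sequence $y$ given input $x$

- $C$ is the constraint function that returns 1 for valid sequences and 0 otherwise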
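As a toy illustration of the objective above, the sketch below brute-forces the best valid sequence over a three-token vocabulary. The vocabulary, the probabilities, and the "must end with a digit" constraint are all made up for illustration; real decoders never enumerate sequences this way.

```python
from itertools import product

# Hypothetical toy vocabulary with position-independent token probabilities.
TOKEN_PROBS = {"a": 0.5, "b": 0.3, "1": 0.2}

def sequence_prob(seq):
    """P(y | x) for a toy model that scores each token independently."""
    p = 1.0
    for tok in seq:
        p *= TOKEN_PROBS[tok]
    return p

def constraint(seq):
    """C(y): 1 if the sequence ends with a digit, 0 otherwise."""
    return 1 if seq[-1].isdigit() else 0

def best_valid_sequence(length):
    """Highest-probability sequence of the given length with C(y) = 1."""
    candidates = product(TOKEN_PROBS, repeat=length)
    valid = [seq for seq in candidates if constraint(seq) == 1]
    return max(valid, key=sequence_prob)

best = best_valid_sequence(2)
unconstrained = max(product(TOKEN_PROBS, repeat=2), key=sequence_prob)
```

Here the unconstrained optimum is `("a", "a")`, while the constraint shifts the result to `("a", "1")`, the most probable sequence that still satisfies it.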

Implementation Details

The actual implementation, as shown in Algorithm 1 from the paper, involves several key steps:

    import numpy as np

    def constrained_decode(input_x, C, max_length):
        # model_forward, softmax, sample_token and EOS_TOKEN are assumed
        # to be provided by the surrounding code; input_x is a token list.
        output = []  # Initialize empty output sequence
        # Generate tokens until EOS or max_length
        while len(output) < max_length:
            # Update constraint state with current output
            C.update(output)
            # Get boolean mask of valid next tokens
            mask = np.asarray(C.mask(), dtype=bool)
            # Get model logits for next token
            logits = model_forward(input_x + output)
            # Set invalid logits to -inf so softmax gives them probability 0
            # (multiplying by the mask would leave them at logit 0 instead)
            masked_logits = np.where(mask, logits, -np.inf)
            # Sample next token using masked probabilities
            probs = softmax(masked_logits)
            next_token = sample_token(probs)
            # Add token to output
            output.append(next_token)
            # Check for end of sequence
            if next_token == EOS_TOKEN:
                break
        return output
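To make the loop concrete, here is a minimal self-contained sketch that runs it end to end. Everything in it is hypothetical: the five-token vocabulary, the fixed `toy_logits` stand-in for a model forward pass, and the `DigitsOnlyConstraint` class, which only mirrors the `update`/`mask` interface used above.

```python
import math
import random

# Hypothetical vocabulary; a digit is placed last so the final weight is never zero.
VOCAB = ["<eos>", "a", "b", "0", "1"]
EOS_ID = VOCAB.index("<eos>")

class DigitsOnlyConstraint:
    """Toy constraint: one or more digits, then EOS."""

    def __init__(self):
        self.output = []

    def update(self, output):
        self.output = list(output)

    def mask(self):
        allowed = [tok.isdigit() for tok in VOCAB]
        # EOS becomes valid only after at least one digit has been emitted.
        allowed[EOS_ID] = len(self.output) > 0
        return allowed

def toy_logits(prefix):
    """Stand-in for a model forward pass: fixed logits that favor letters."""
    return [1.0, 2.0, 2.0, 0.0, 0.5]

def masked_softmax(logits, mask):
    """Softmax over valid tokens only; invalid tokens get probability 0."""
    exps = [math.exp(l) if ok else 0.0 for l, ok in zip(logits, mask)]
    total = sum(exps)
    return [e / total for e in exps]

def constrained_decode(constraint, max_length, rng):
    output = []
    while len(output) < max_length:
        constraint.update(output)
        probs = masked_softmax(toy_logits(output), constraint.mask())
        next_token = rng.choices(range(len(VOCAB)), weights=probs)[0]
        output.append(next_token)
        if next_token == EOS_ID:
            break
    return output

tokens = constrained_decode(DigitsOnlyConstraint(), max_length=8, rng=random.Random(0))
text = "".join(VOCAB[t] for t in tokens if t != EOS_ID)
```

Even though the toy model prefers the letters `a` and `b`, the decoded `text` contains only digits, because the mask removes every other token before sampling.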

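The process can be represented mathematically for each timestep $t$ as:

$$P'(y_t \mid x, y_{<t}) \propto P(y_t \mid x, y_{<t}) \cdot m_t(y_t)$$

where $m_t$ is a binary mask that zeroes out probabilities of invalid tokens; the result is renormalized over the remaining valid tokens.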
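A short worked example may help here. The numbers are invented for illustration: a three-token vocabulary with model probabilities $p$ and a mask $m$ that forbids the second token.

```latex
p = (0.5,\ 0.3,\ 0.2), \qquad m = (1,\ 0,\ 1)

p \odot m = (0.5,\ 0,\ 0.2), \qquad \textstyle\sum_v p_v m_v = 0.7

p' = \left(\tfrac{0.5}{0.7},\ 0,\ \tfrac{0.2}{0.7}\right) \approx (0.714,\ 0,\ 0.286)
```

The forbidden token ends up with probability exactly zero, and the two valid tokens keep their relative ratio of 5 to 2.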

Grammar-Based Constraints

For JSON Schema specifically, the constraints are typically implemented through grammar-based approaches. The process involves:

- Schema Parsing: Convert JSON Schema into a formal grammar

- Grammar Compilation: Transform into an efficient representation

- Dynamic Masking: Use the grammar to compute valid token masks

The grammar can be represented as a 4-tuple:

$$G = (N, \Sigma, R, S)$$

where:

- $N$ is the set of non-terminal symbols

- $\Sigma$ is the set of terminal symbols (tokens)

- $R$ is the set of production rules

- $S \in N$ is the start symbol
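The 4-tuple and the dynamic masking step can be sketched together in a few lines. The `Grammar` dataclass, the `first_terminals` helper, and the toy rules below are hypothetical and not taken from any of the evaluated frameworks; the helper only answers which terminals may appear first, assuming the grammar has no empty productions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Grammar:
    nonterminals: frozenset
    terminals: frozenset
    productions: dict  # nonterminal -> list of right-hand sides (tuples of symbols)
    start: str

def first_terminals(grammar, symbol, seen=None):
    """Terminals that can begin a string derived from `symbol`.

    Assumes no empty productions, so only the first symbol of each rule matters.
    """
    seen = set() if seen is None else seen
    if symbol in grammar.terminals:
        return {symbol}
    if symbol in seen:  # guard against left recursion and repeated work
        return set()
    seen.add(symbol)
    result = set()
    for rhs in grammar.productions[symbol]:
        result |= first_terminals(grammar, rhs[0], seen)
    return result

toy = Grammar(
    nonterminals=frozenset({"value", "object", "array"}),
    terminals=frozenset({"{", "}", "[", "]", "true", "false"}),
    productions={
        "value": [("object",), ("array",), ("true",), ("false",)],
        "object": [("{", "}")],
        "array": [("[", "value", "]"), ("[", "]")],
    },
    start="value",
)

allowed_first = first_terminals(toy, toy.start)
```

A token mask for the first decoding step would then mark exactly the members of `allowed_first` as valid. Production frameworks have to track parser state across the whole output and map grammar terminals onto LLM tokens, which this sketch does not attempt.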

Optimization Techniques

Modern implementations employ several optimization strategies:

- Parallel Mask Computation: Computing masks concurrently with the LLM forward pass

- Grammar Caching: Reusing compiled grammars for similar schemas (amortized)

- Speculative Decoding: Pre-computing masks for likely next tokens
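Of these strategies, grammar caching is the easiest to sketch. The example below is an assumption-laden illustration: `_compile_canonical` is a hypothetical stand-in for an expensive schema-to-grammar compilation, and the cache only hits on schemas that are identical after key-order canonicalization, which is narrower than reuse across merely similar schemas.

```python
import json
from functools import lru_cache

compile_calls = 0  # counts how often the expensive step actually runs

@lru_cache(maxsize=128)
def _compile_canonical(schema_json):
    """Hypothetical stand-in for an expensive schema-to-grammar compilation."""
    global compile_calls
    compile_calls += 1
    schema = json.loads(schema_json)
    return ("compiled-grammar", tuple(sorted(schema.get("properties", {}))))

def compile_schema(schema):
    # Canonicalize so that differing key order does not defeat the cache.
    return _compile_canonical(json.dumps(schema, sort_keys=True))

a = compile_schema({"type": "object", "properties": {"x": {"type": "integer"}}})
b = compile_schema({"properties": {"x": {"type": "integer"}}, "type": "object"})
```

Both calls return the same compiled object, and `compile_calls` stays at 1, so the compilation cost is paid once and amortized over later requests.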

Extended Backus-Naur Form (EBNF)

While EBNF is expressive enough to define arbitrary context-free grammars, which are comparatively costly to parse in the worst case, JSON Schema represents a more constrained subset that can be parsed more efficiently. This trade-off between expressiveness and efficiency is crucial for practical applications.

The core JSON grammar that these schema constraints build on can be written in EBNF as (the `string` and `number` rules are omitted):

    json = object | array | string | number | "true" | "false" | "null"

    object = "{" (string ":" json ("," string ":" json)*)? "}"

    array = "[" (json ("," json)*)? "]"

This restricted grammar allows for more efficient parsing and validation while still maintaining sufficient expressiveness for most practical use cases.
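The three rules above translate almost directly into a recursive-descent recognizer. The sketch below works on a pre-tokenized input and makes one simplifying assumption: the placeholder tokens `"STR"` and `"NUM"` stand for the `string` and `number` terminals, whose rules are not given in the grammar.

```python
def expect(tokens, i, expected):
    """Consume one specific token or raise ValueError."""
    if i >= len(tokens) or tokens[i] != expected:
        raise ValueError(f"expected {expected!r} at position {i}")
    return i + 1

def parse_json(tokens, i=0):
    """Return the index just past one `json` value starting at tokens[i]."""
    if i >= len(tokens):
        raise ValueError("unexpected end of input")
    tok = tokens[i]
    if tok in ("STR", "NUM", "true", "false", "null"):
        return i + 1
    if tok == "{":
        return parse_object(tokens, i)
    if tok == "[":
        return parse_array(tokens, i)
    raise ValueError(f"unexpected token {tok!r} at position {i}")

def parse_object(tokens, i):
    i = expect(tokens, i, "{")
    if i < len(tokens) and tokens[i] == "}":
        return i + 1  # empty object
    while True:
        i = expect(tokens, i, "STR")
        i = expect(tokens, i, ":")
        i = parse_json(tokens, i)
        if i < len(tokens) and tokens[i] == ",":
            i += 1
            continue
        return expect(tokens, i, "}")

def parse_array(tokens, i):
    i = expect(tokens, i, "[")
    if i < len(tokens) and tokens[i] == "]":
        return i + 1  # empty array
    while True:
        i = parse_json(tokens, i)
        if i < len(tokens) and tokens[i] == ",":
            i += 1
            continue
        return expect(tokens, i, "]")

def is_valid(tokens):
    """True if the whole token list is exactly one `json` value."""
    try:
        return parse_json(tokens) == len(tokens)
    except ValueError:
        return False
```

For example, `is_valid(["{", "STR", ":", "[", "NUM", ",", "true", "]", "}"])` accepts, while a trailing comma as in `["[", "NUM", ",", "]"]` is rejected. Each token is visited once, which is what makes this restricted grammar cheap to check.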

Key Findings

Efficiency Analysis

The study revealed significant variations in performance across different frameworks:

- Grammar Compilation Time (GCT):

  - Guidance and Llamacpp: Near-instantaneous compilation

  - Outlines: Higher overhead (3-8s) due to regex-based constraints

- Time Per Output Token (TPOT):

  - Guidance achieved ~50% faster generation compared to baseline

  - Llamacpp and Outlines showed slightly lower throughput
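A rough way to collect these two metrics for a single schema is sketched below. The `compile_fn` and `generate_fn` callables are hypothetical stand-ins for a real framework, and TPOT is taken here as total generation time divided by the number of tokens, which is an assumption and may differ from the exact definition used in the study.

```python
import time

def measure(compile_fn, generate_fn, schema):
    """Return (gct_seconds, tpot_seconds) for one schema.

    compile_fn(schema) -> grammar and generate_fn(grammar) -> list of tokens
    are hypothetical callables standing in for a real framework.
    """
    start = time.perf_counter()
    grammar = compile_fn(schema)
    gct = time.perf_counter() - start

    start = time.perf_counter()
    tokens = generate_fn(grammar)
    elapsed = time.perf_counter() - start
    # Assumption: TPOT is total generation time divided by token count.
    tpot = elapsed / max(len(tokens), 1)
    return gct, tpot

# Dummy callables so the sketch runs without any framework installed.
gct, tpot = measure(lambda s: ("grammar", s), lambda g: ["{", "}"], {"type": "object"})
```

In practice the measurements would be averaged over many schemas and repeated runs, since a single timing is dominated by noise.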

Coverage Results

The research evaluated three types of coverage:

- Declared Coverage: Whether a framework can process a schema without errors

- Empirical Coverage: Whether generated outputs comply with the schema

- True Coverage: Whether constraints exactly match the schema's semantics
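The first two coverage notions can be computed from per-schema results, as in the sketch below. The `Result` record and the choice to report both values as fractions of all schemas are assumptions made for illustration; true coverage is not included because it cannot be read off individual generations.

```python
from dataclasses import dataclass

@dataclass
class Result:
    compiled: bool      # framework accepted the schema without error
    output_valid: bool  # generated output validated against the schema

def coverage(results):
    """Return (declared, empirical) coverage as fractions of all schemas."""
    total = len(results)
    declared = sum(r.compiled for r in results) / total
    # An output only counts if the schema was accepted in the first place.
    empirical = sum(r.compiled and r.output_valid for r in results) / total
    return declared, empirical

results = [
    Result(True, True),
    Result(True, False),
    Result(False, False),
    Result(True, True),
]
declared, empirical = coverage(results)
```

With these four made-up results, declared coverage is 0.75 and empirical coverage is 0.5. The gap between the two is the share of schemas a framework accepts but then fails to satisfy.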

Key findings showed that Guidance led in empirical coverage across 6 out of 8 datasets, with compliance rates exceeding 90% on most collections.

Quality Impact

Contrary to some concerns, constrained decoding showed positive effects on output quality:

- Average improvement of 3% across all tasks

- Consistent gains even in minimally structured tasks

- No observed negative impacts on semantic accuracy

Practical Implications

The research offers several important insights for practitioners:

- Framework Selection: Choose based on your primary requirements:

  - Need for speed: Guidance

  - Maximum compatibility: Llamacpp

  - Strict validation: OpenAI/Gemini

- Performance Optimization: Consider the trade-offs between compilation time and generation speed.

- Coverage Considerations: Account for both declared and empirical coverage when evaluating frameworks for production use.

Conclusion

JSONSchemaBench sets a new standard for evaluating constrained decoding frameworks. The results demonstrate that while current solutions are effective, there's still room for improvement in areas like compilation efficiency and feature coverage. This benchmark provides a foundation for future research and development in structured generation capabilities for LLMs.

The study's findings suggest that constrained decoding not only ensures structural validity but can also enhance the quality of generated content. As LLMs continue to evolve, the ability to reliably generate structured outputs will become increasingly important for real-world applications.

For practitioners, this research provides clear guidance on choosing and implementing constrained decoding solutions, while for researchers, it identifies promising directions for future work in improving these essential tools.